Abstract

I created a reinforcement learning model with PyTorch to play a simplified version of the game Balatro. After refining the model architecture and reward shaping, I was unable to observe meaningful score improvements, and eventually decided to switch to a supervised learning approach. These trials were partially successful, and the agent ultimately managed to perform significantly better than random play, though still far below the mathematical optimum.

Key contributions

- Rebuilt a playable version of the underlying game from scratch.

- Designed and implemented model architecture, reward-shaping, training loop, and visualization tools.

- Implemented logging, reproducible runs, and evaluated model; researched and implemented abstract solutions and ablations.

- Created an oracle policy by computing the optimal action for sampled states under the simplified rule set, and used it to generate supervised training data.

Methods

The goal of this project was to investigate whether a machine learning model could predict the expected value of a given action in a simple, stochastic card game environment. This required mapping game states to specific actions under uncertainty from future draws.

The environment was implemented from scratch to simulate game dynamics and enforce legal actions. The game state was encoded as a fixed-length numerical vector describing the cards currently in hand and which of them were selected to be played. This representation was chosen to be compact while retaining only the necessary information for decision-making.

The initial goal was to succeed in creating a reinforcement learning model, but I was unable to observe any productive results with a DQN-style algorithm after tweaking the model's architecture and reward shaping in many different ways. I decided to experiment with supervised learning instead, and created an algorithm to generate training data; mathematically finding the optimal action given a random game state. The reinforcement learning model used gradient-based optimization to iteratively improve policy performance, while the supervised model used minibatch training.

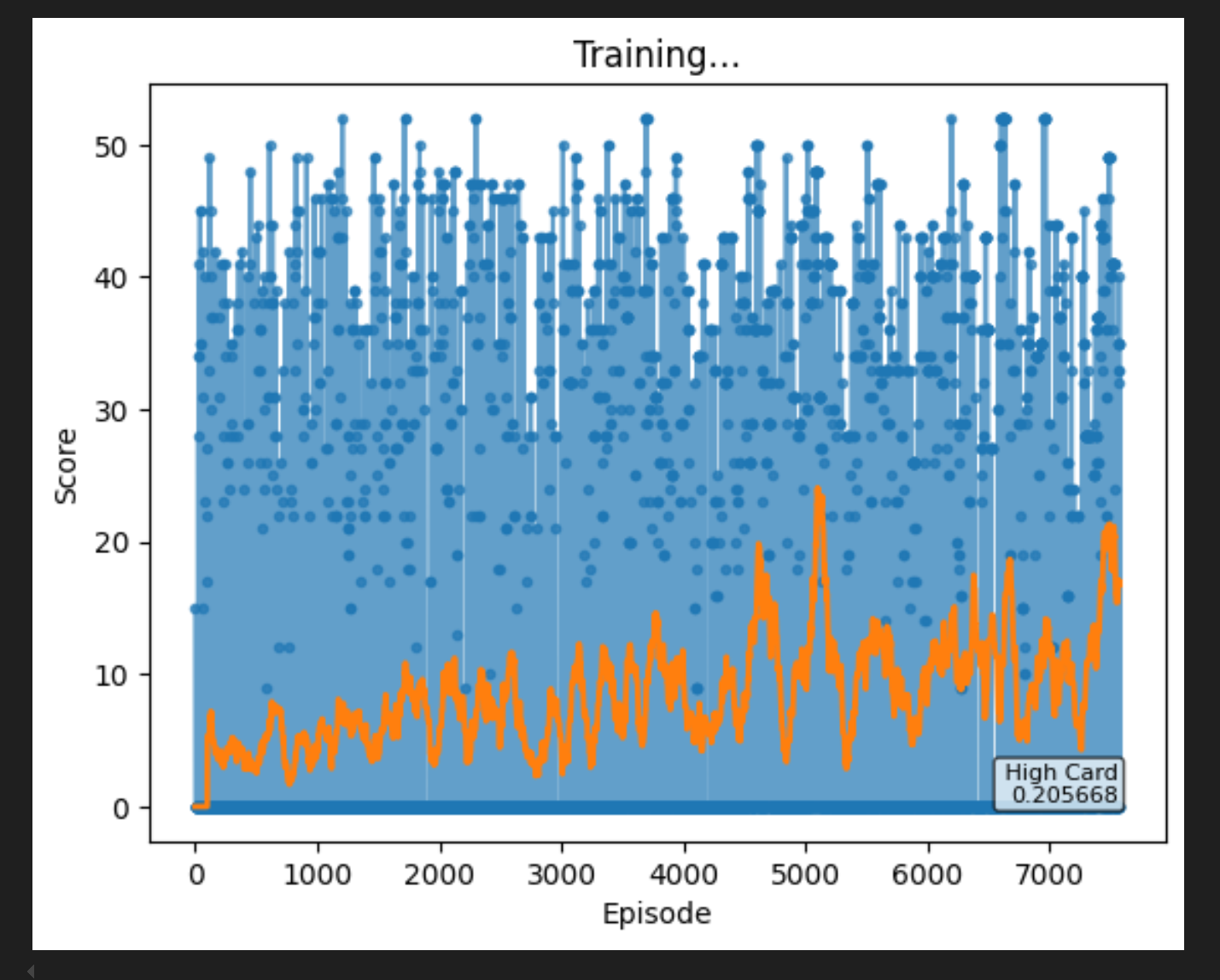

I evaluated training performance using the episodic average over time, comparing against random play and optimal play as baselines. I tracked learning stability, variance across runs, and sensitivity to hyperparameters such as learning rate and reward scaling.

Results / What I learned

Ultimately, the reinforcement learning model failed to demonstrate any substantial improvement, often performing below a random baseline. Training was unstable and sensitive to reward scaling, and learned policies often collapsed to degenerate action choices.

Conversely, the supervised learning model was able to surpass random play and learn significant rudimentary strategies; however, performance plateaued below optimal levels. Limitations included sparse reward signals, high variance in outcomes, and a noisy state representation. These results motivated further exploration of alternative representations and training strategies.

This project motivated me to learn about machine learning from the ground up in a hands-on, interactive environment. I strengthened my understanding of reward shaping, learning dynamics, and tackled the practical challenges of training agents in sparse-reward environments. It also underlined the importance of careful experimental design in computational research.